Members of the Shao Group, including, from left to right, Zhezheng Song, Tasfia Zahin, Mingfu Shao and Xiang Li, recently presented three papers at RECOMB, one of the top conferences in computational biology. Credit: Kate Myers/Penn State.

Q&A: How does computer science advance biology?

June 24, 2025

By Mariah Lucas

UNIVERSITY PARK, Pa. — Computer science and biology have a symbiotic relationship: computer scientists can develop new ways to analyze biological data, leading to new discoveries in biology and biomedicine, and biology can inspire and inform computational approaches. In some cases, the two disciplines merge in a single type of researcher: computational biologists.

At Penn State, two researchers are at the forefront of this intersection. Mingfu Shao, associate professor of computer science and engineering, and David Koslicki, associate professor of computer science and engineering and of biology, recently presented three papers at RECOMB, one of the top conferences in computational biology. The conference took place April 26-29 in Seoul, South Korea.

In this Q&A, Shao and Koslicki, who are affiliated with the Center for Computational Biology and Bioinformatics and the Intercollege Graduate Degree Program in Bioinformatics and Genomics in the Huck Institutes of the Life Sciences, spoke about how computational tools are advancing molecular biology.

Q: What is computational biology, and how does your research fit into this category?

Koslicki: Computational biology is one of the most interdisciplinary fields in science: It combines research from areas as diverse as physics, chemistry, computer science, mathematics, biology and statistics, all under the unified theme of using computational tools to extract insight from biological data. I use both mathematical and machine learning tools to shed light on DNA and RNA sequencing data, which tell us about the genome and which genes are expressed, and at what level, at a given time. I also work on organizing and learning from biomedical knowledge more broadly. This includes extracting knowledge from published papers and information repositories to connect concepts, shed light on how different drugs might treat a disease and better understand the mechanisms behind a disease.

Shao: Computational biologists develop algorithms that answer questions from biological datasets. My research lab works on three topics in this field. First, we develop methods to analyze RNA-sequencing data, with a focus on understanding gene expression at high resolution. Second, we develop fast algorithms for studying genome rearrangements, to understand how genetic instructions may change under various evolutionary models. Finally, we design error-tolerant algorithms, which are instrumental for comparing and processing sequencing data that classic approaches often cannot assess accurately.

Q: How does your research allow for a better understanding of biology or biomedicine?

Koslicki: My work with the National Center for Advancing Translational Sciences’ Biomedical Data Translator program involves building advanced computational tools called knowledge graphs, which integrate vast amounts of biological data to reveal hidden relationships among genes, diseases and potential treatments. These tools help scientists more rapidly identify the genetic underpinnings of complex disorders, providing clearer pathways toward accurate diagnoses and personalized therapies. By connecting genetic variations to specific diseases, we gain deeper insights into how certain mutations affect health, and this helps doctors and researchers pinpoint promising treatments faster. Ultimately, this research accelerates medical discovery and improves the treatment of human diseases.
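
To make the idea of a knowledge graph concrete, here is a minimal Python sketch: entities are nodes, typed relations are directed edges, and a breadth-first search surfaces a multi-hop chain linking a drug to a disease. Every entity and relation name (`DrugA`, `GeneX`, `DiseaseY` and so on) is a hypothetical placeholder rather than real biomedical data, and this is not how the Translator program's knowledge graphs are built; real systems are vastly larger and richer.

```python
from collections import deque

# Hypothetical toy knowledge graph: all entities and relations are placeholders,
# not real biomedical facts.
EDGES = [
    ("DrugA", "inhibits", "GeneX"),
    ("GeneX", "associated_with", "DiseaseY"),
    ("DrugB", "targets", "GeneZ"),
    ("GeneZ", "interacts_with", "GeneX"),
]

def build_graph(edges):
    """Adjacency list mapping each node to its outgoing (relation, neighbor) pairs."""
    graph = {}
    for head, relation, tail in edges:
        graph.setdefault(head, []).append((relation, tail))
        graph.setdefault(tail, [])
    return graph

def find_path(graph, start, goal):
    """Breadth-first search for a shortest chain of relations from start to goal."""
    queue = deque([(start, [start])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for relation, neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, path + [f"-{relation}->", neighbor]))
    return None  # no chain of relations connects start to goal

graph = build_graph(EDGES)
print(find_path(graph, "DrugB", "DiseaseY"))
```

The search returns the chain `DrugB -targets-> GeneZ -interacts_with-> GeneX -associated_with-> DiseaseY`, an indirect relationship that no single edge states on its own.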

Shao: My research develops computational tools that bridge RNA-sequencing data and biological discovery to enhance our ability to interpret genomic data in both basic and biomedical contexts. We have created highly accurate tools that assist in gene expression analysis and biomarker discovery; for instance, one of our tools was used to identify genetic material in studies of SARS-CoV-2, the virus that causes COVID-19. In comparative genomics, we design algorithms to trace genome rearrangements over evolution, offering insights into genome structure and function.

Members of the Shao Group and Koslicki Lab pose for an outdoor photo. Credit: Kate Myers/Penn State.

Q: In one of the RECOMB papers, you described EquiRep, a tool your team developed to identify repeated patterns in error-prone sequencing data. What is EquiRep, and why is identifying such patterns important for understanding health and disease?

Shao: Repeats are common in genomes. They make up about 8% to 10% of the human genome and have been closely linked to several neurological and developmental disorders, such as Huntington’s disease, Friedreich’s ataxia and Fragile X syndrome. Analyzing repeats and deciphering their connections with diseases often hinges on accurately reconstructing the repeating units, either from an assembled genome or from unassembled reads, which frequently contain errors. We presented EquiRep, a new computational approach to this problem, developed by computer science master’s student Zhezheng Song and computer science doctoral student Tasfia Zahin. EquiRep reconstructs a “consensus” unit from the repeating pattern, and it stands out for its robustness to sequencing errors and its effectiveness at detecting repeats with low copy numbers.
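
The idea of recovering a consensus unit from an error-prone repeat can be illustrated with a deliberately simple Python sketch. It is not EquiRep's algorithm: it assumes only substitution errors (no insertions or deletions), estimates the unit length as the self-shift with the lowest mismatch rate, and then takes a per-position majority vote across copies. The read and the unit `ACGTTG` are made up for illustration.

```python
from collections import Counter

def best_period(seq, min_p=2, max_p=None):
    """Return the shift p at which seq best matches itself (lowest mismatch rate)."""
    if max_p is None:
        max_p = len(seq) // 2
    best, best_rate = None, float("inf")
    for p in range(min_p, max_p + 1):
        pairs = len(seq) - p
        mismatches = sum(seq[i] != seq[i + p] for i in range(pairs))
        rate = mismatches / pairs
        if rate < best_rate:  # strict "<" keeps the smallest p on ties
            best, best_rate = p, rate
    return best

def consensus_unit(seq, p):
    """Majority vote over all characters sitting at the same offset within each copy."""
    return "".join(Counter(seq[j::p]).most_common(1)[0][0] for j in range(p))

# Five copies of a hypothetical unit "ACGTTG", two of them carrying one substitution each.
read = "ACGTTG" "ACGTTG" "ACCTTG" "ACGTTG" "ACGTAG"
p = best_period(read)
print(p, consensus_unit(read, p))  # 6 ACGTTG
```

Because each corrupted base is outvoted by the intact copies, even this toy version tolerates scattered substitutions; insertions and deletions would break the fixed-offset alignment it relies on.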

Q: What is satisfiability solving, which you described in one of the RECOMB papers, and how can it help scientists conduct genome research and measure genetic changes?

Shao: Satisfiability is perhaps the most fundamental problem in computer science. It asks whether a given set of logical statements, or formula, can be made true by assigning true or false values to its variables. It is fundamental in the sense that an algorithm that solves the satisfiability problem can be adapted to solve many other problems.
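
A brute-force checker makes the definition of satisfiability concrete. The sketch below assumes the common conjunctive normal form (CNF) encoding, in which a formula is an AND of clauses, each clause is an OR of literals, and literals are written as signed integers; it simply tries all 2^n assignments. Practical SAT solvers use far cleverer search, so treat this as an illustration of the question being asked, not of how it is answered at scale.

```python
from itertools import product

def is_satisfiable(clauses, num_vars):
    """Brute-force CNF satisfiability check.

    Each clause is a list of nonzero ints: k means "variable k is true",
    -k means "variable k is false". Returns the first satisfying assignment
    found (as a dict), or None if the formula is unsatisfiable.
    """
    for values in product([False, True], repeat=num_vars):
        assignment = {k + 1: v for k, v in enumerate(values)}
        # A clause holds if at least one of its literals holds.
        if all(any(assignment[abs(lit)] == (lit > 0) for lit in clause) for clause in clauses):
            return assignment
    return None

# (x1 OR NOT x2) AND (x2 OR x3) AND (NOT x1 OR NOT x3)
print(is_satisfiable([[1, -2], [2, 3], [-1, -3]], 3))  # {1: False, 2: False, 3: True}
# (x1) AND (NOT x1) cannot be made true
print(is_satisfiable([[1], [-1]], 1))  # None
```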

We explore using satisfiability to solve important problems in biology. In this paper, led by first-year Penn State computer science doctoral student Aaryan Mahesh Sarnaik and postdoctoral scholar Ke Chen, we applied satisfiability to compute the so-called double-cut-and-join distance, which measures the large-scale genomic changes that separate two genomes over the course of evolution. Such large-scale events, known as genome rearrangements, are associated with various diseases, including cancers, congenital disorders and neurodevelopmental conditions. Studying genome rearrangements during evolution may identify specific genetic changes that contribute to diseases, which can aid in diagnostics and targeted therapies. To compute the double-cut-and-join distance, we reduced it to the satisfiability problem, meaning that solving the resulting satisfiability instance gives the exact double-cut-and-join distance. Using simulated and real genomic datasets, we found that our approach runs much faster than other approaches.
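
For intuition about what the double-cut-and-join (DCJ) distance counts, the sketch below handles the simplest textbook setting, where a closed-form formula exists: two genomes with the same genes, each gene occurring exactly once, and every chromosome circular. There the distance equals N - C, where N is the number of genes and C is the number of cycles in the adjacency graph built from the two genomes' gene adjacencies. This is not the satisfiability reduction from the paper; the source does not say which genome model the paper targets, and the signed-integer chromosome encoding is an assumption for illustration.

```python
def adjacencies(genome):
    """Map each gene extremity to the extremity it is adjacent to.

    genome: list of circular chromosomes, each a list of signed gene ids
    (-2 means gene 2 in reverse orientation). Each gene must occur once.
    """
    partner = {}
    for chrom in genome:
        ends = []
        for g in chrom:
            tail, head = (abs(g), "t"), (abs(g), "h")
            ends.extend([tail, head] if g > 0 else [head, tail])
        # Pair the right end of each gene with the left end of the next one,
        # wrapping around because the chromosome is circular.
        for i in range(1, len(ends), 2):
            a, b = ends[i], ends[(i + 1) % len(ends)]
            partner[a], partner[b] = b, a
    return partner

def dcj_distance(genome_a, genome_b):
    """DCJ distance N - C for circular genomes with identical, unduplicated gene sets."""
    pa, pb = adjacencies(genome_a), adjacencies(genome_b)
    n_genes = len(pa) // 2  # every gene contributes two extremities
    seen, cycles = set(), 0
    for start in pa:
        if start in seen:
            continue
        cycles += 1
        x = start
        # Alternate between A-adjacencies and B-adjacencies until the cycle closes.
        while x not in seen:
            seen.add(x)
            y = pa[x]
            seen.add(y)
            x = pb[y]
    return n_genes - cycles

print(dcj_distance([[1, 2, 3]], [[1, -2, 3]]))  # 1: a single inversion
print(dcj_distance([[1, 2, 3]], [[1, 2, 3]]))   # 0: identical genomes
```

Splitting one circular chromosome into two circular ones, for example `[[1, 2, 3, 4]]` into `[[1, 2], [3, 4]]`, likewise costs a single DCJ operation.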

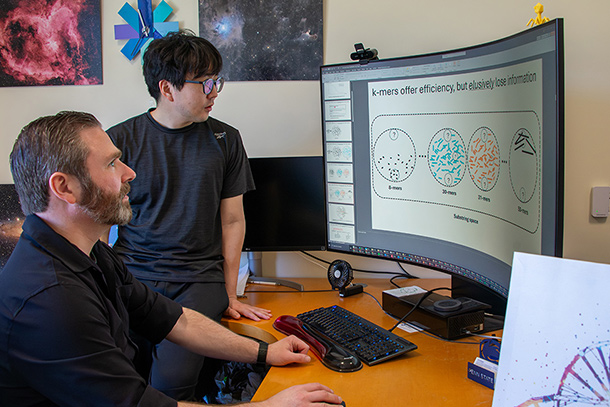

Q: What are k-mers, and why are their sizes important? How does the Prokrustean graph presented in your paper track the size changes of k-mer-based structures?

Koslicki: You can think of a genomic sequence as a long string of the nucleotide bases, or characters, A, C, G and T, that make up DNA. A k-mer is a run of k consecutive characters that appears in a genomic sequence. So, a 3-mer, for example, might be “ACT.” K-mers are used ubiquitously in computational biology, with applications ranging from determining which microbes are in an environmental sequencing sample to reconstructing whole genomes from fragments.
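
A short Python sketch shows how k-mers are extracted by sliding a window of width k along a sequence, and how often each one occurs. The function names are illustrative and not taken from any particular tool.

```python
from collections import Counter

def kmers(seq, k):
    """Yield every k-mer (length-k substring) of seq, from left to right."""
    for i in range(len(seq) - k + 1):
        yield seq[i:i + k]

def count_kmers(seq, k):
    """Count how many times each k-mer occurs in seq."""
    return Counter(kmers(seq, k))

print(list(kmers("GATTACA", 3)))  # ['GAT', 'ATT', 'TTA', 'TAC', 'ACA']
print(count_kmers("ACTACTA", 3))
```

A sequence of length L contains L - k + 1 k-mers, so "ACTACTA" yields five 3-mers, with "ACT" and "CTA" each appearing twice.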

While k-mers are useful, it’s still not clear how best to choose k! For example, is k=7 better or worse than k=13 for a particular application? It’s computationally expensive and cumbersome to run through every value of k and check each one manually. The Prokrustean graph, invented by my extremely talented computer science master’s student Adam Park, is a data structure that lets practitioners iterate through all k-mer sizes very quickly, determining which size or sizes to use in a matter of minutes instead of days.
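
The brute-force alternative described above looks something like the sketch below: for every candidate k, rescan all reads and recompute a statistic, here the number of distinct k-mers (an arbitrary choice for illustration). The work repeats once per value of k, which is the cost a structure like the Prokrustean graph is meant to avoid. This is not the Prokrustean graph itself, and the reads are hypothetical.

```python
def distinct_kmer_profile(reads, k_values):
    """For each k, count distinct k-mers across all reads by rescanning from scratch."""
    profile = {}
    for k in k_values:
        seen = set()
        for read in reads:
            for i in range(len(read) - k + 1):
                seen.add(read[i:i + k])
        profile[k] = len(seen)
    return profile

reads = ["ACTACTA", "GATTACA"]  # hypothetical toy reads
print(distinct_kmer_profile(reads, range(1, 8)))
# {1: 4, 2: 7, 3: 7, 4: 7, 5: 6, 6: 4, 7: 2}
```

Even on two toy reads the statistic shifts with k; on real datasets with billions of bases, repeating the full scan for each k is what makes the manual approach slow.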

Q: How do computational tools, like the Prokrustean graph, advance modern medicine?

Koslicki: While the mathematical details may seem abstract, these computational tools are the engines that power modern precision medicine. Developing new computational methods and data structures like the Prokrustean graph represents crucial infrastructure work that enables scientific breakthroughs in genomics and medicine. Just as better microscopes led to discoveries in cell biology, improved algorithms for analyzing DNA sequences help researchers understand genetic diseases, develop personalized treatments and track infectious disease outbreaks more effectively. By making complex genomic analyses faster and more comprehensive, we accelerate the pace of medical research.

Q: How did students contribute to the tools developed in the papers?

Shao: Two of the papers were first-authored or co-first-authored by computer science master’s students, Adam Park and Zhezheng Song, and the third by a first-year computer science doctoral student, Aaryan Mahesh Sarnaik. Such significant contributions from junior researchers are rare and highlight both their exceptional talent and the strong mentoring environment that enabled their success.

David Koslicki (seated), associate professor of computer science and engineering and of biology, developed the Prokrustean graph with his computer science master's student Adam Park. Credit: Kate Myers/Penn State.