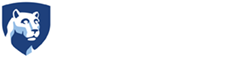

Xingjie Ni (left), associate professor of electrical engineering, displays a camera sensor integrated with a three-millimeter-by-three-millimeter metasurface. The metasurface turns a conventional camera into a hyperspectro-polarimetric camera. Zhiwen Liu (right), co-corresponding author on the paper, is a professor of electrical engineering. Credit: Kate Myers/Penn State.

Seeing like a butterfly: Optical invention enhances camera capabilities

Sept. 4, 2024

By Mariah Lucas

UNIVERSITY PARK, Pa. — Butterflies can see more of the world than humans, including more colors and the field oscillation direction, or polarization, of light. This special ability enables them to navigate with precision, forage for food and communicate with one another. Other species, like the mantis shrimp, can sense an even wider spectrum of light, as well as the circular polarization, or spinning states, of light waves. They use this capability to signal a “love code,” which helps them find and be discovered by mates.

Inspired by these abilities in the animal kingdom, a team of researchers in the Penn State College of Engineering developed an ultrathin optical element known as a metasurface, which can attach to a conventional camera. Using tiny, antenna-like nanostructures that tailor the properties of light, the metasurface encodes the spectral and polarization data of images captured in a snapshot or video. A machine learning framework, also developed by the team, then decodes this multidimensional visual information in real time on a standard laptop.

The researchers published their work today (Sept. 4) in Science Advances.

“As the animal kingdom shows us, the aspects of light beyond what we can see with our eyes holds information that we can use in a variety of applications,” said Xingjie Ni, associate professor of electrical engineering and lead corresponding author of the paper. “To do this, we effectively transformed a conventional camera into a compact, lightweight hyperspectro-polarimetric camera by integrating our metasurface within it.”

Hyperspectral and polarimetric cameras — which often are bulky and expensive to produce — capture either spectrum or polarization data, but not both simultaneously, Ni explained. By contrast, when positioned between a photography camera’s lens and sensors, the three-millimeter-by-three-millimeter metasurface, which is inexpensive to manufacture, captures both types of imaging data simultaneously and transmits the data immediately to a computer.

The raw images must then be decoded to reveal the spectral and polarization information. To achieve this, Bofeng Liu, a doctoral student in electrical engineering and co-author of the paper, built a machine learning framework trained on 1.8 million images using data augmentation techniques.

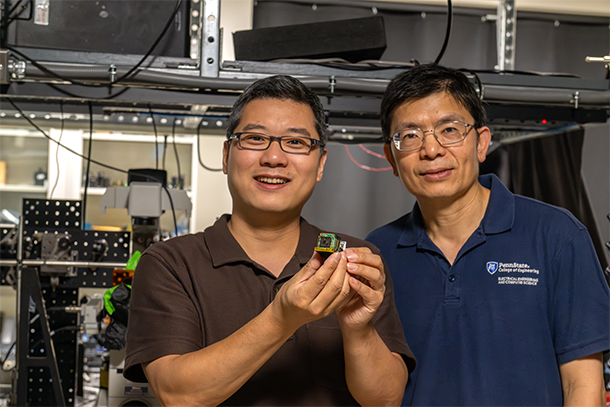

Electrical engineering graduate students Lidan Zhang (left) and Bofeng Liu, both coauthors on the paper, tested their metasurface and neural network by video recording transparent “PSU” letters while experimenting with different laser beam illuminations. Credit: Kate Myers/Penn State.

“At 28 frames per second — primarily limited by the speed of the camera we used — we are able to rapidly recover both spectral and polarization information using our neural network,” Liu said. “This allows us to capture and view the image data in real-time.”

Researchers tested their metasurface and neural network by video recording transparent “PSU” letters under different laser beam illuminations. They also captured images of the glorious scarab beetle, known for reflecting circularly polarized light visible to others of its species.

Having immediate access to hyperspectro-polarimetric information of different objects could benefit consumers if the technology were commercialized, Ni said.

“We could bring our camera along to the grocery store, take snapshots and assess the freshness of the fruit and vegetables on the shelves before buying,” Ni said. “This augmented camera opens a window into the unseen world.”

Additionally, in biomedical applications, Ni said, hyperspectro-polarimetric information could be used to differentiate the material and structural properties of tissues within the body, potentially aiding in the diagnosis of cancerous cells.

This work builds on Ni’s prior research and development of other metasurfaces, such as one that mimics the processing power of the human eye, and of metalenses, such as one capable of imaging far-away objects like the moon.

In addition to Ni and Bofeng Liu, the co-authors include co-corresponding author Zhiwen Liu, a professor of electrical engineering at Penn State; Hyun-Ju Ahn, a postdoctoral scholar in electrical engineering; and Lidan Zhang, Chen Zhou, Yiming Ding, Shengyuan Chang, Yao Duan, Md Tarek Rathman, Tunan Xia and Xi Chen, graduate students in electrical engineering.

The U.S. National Science Foundation and the National Eye Institute of the U.S. National Institutes of Health supported this research.