According to researchers at Penn State, intelligent system-level infrastructure management solutions are essential for achieving resilience and sustainability in an aging and changing built environment. ORIGINAL PHOTOGRAPH BY CARMELO PAULO R. B./WIKIPEDIA. EDITED BY CHARALAMPOS ANDRIOTIS.

How to train your infrastructure

Penn State researchers develop deep reinforcement learning framework to maintain the built environment

8/6/2020

By Tim Schley

UNIVERSITY PARK, Pa. — When an engineer chooses to inspect, repair or replace a large, deteriorating structure, that decision could be optimized through a sequential decision-making framework based on artificial intelligence (AI), according to Penn State researchers.

Their new approach integrates deep reinforcement learning, a form of AI in which an autonomous “agent” learns by trial and error from the consequences of its decisions to discover the optimal sequence of actions for a given sequential decision-making scenario.

“The idea for reinforcement learning comes from the way humans learn,” said Kostas Papakonstantinou, assistant professor of civil engineering at Penn State. “If you do something well, there is a reward system, and you’re trying to find the path with the highest total reward.”

For aging civil infrastructure, Papakonstantinou explained, the challenge is to identify an asset management strategy that limits costs while maintaining long-term safety and operational use. Regularly scheduled maintenance may extend a structure’s service life, but if it occurs too often, resources are spent unnecessarily and regular operation is disrupted. Conversely, if a needed inspection is skipped for short-term benefits, it could lead to costly repairs and more disruptions down the road.

Every decision regarding the structure or any of its components contributes to a long-term, system-wide strategy, but Papakonstantinou noted there is a catch: even if the timing is right, inspections are not always perfect. There is a chance an underlying problem was missed.

“If we don’t observe the conditions of the infrastructure with certainty, how can we make optimal decisions?” Papakonstantinou said.

This uncertainty, he said, has been difficult to incorporate into a reinforcement learning framework for real-world applications. The model must be able to map an infrastructure’s dynamic deterioration process while also determining, in real time, the probable state of the overall system and its components.
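To make the idea of a “probable state” concrete, here is a minimal sketch of a standard Bayesian belief update for a single component. It is an illustration only, not the researchers’ model: the four damage levels, the yearly deterioration probabilities in `T` and the inspection accuracy probabilities in `O` are all hypothetical values chosen for the example.

```python
# Illustrative only: every number below is an assumption, not data from the study.
STATES = ["no damage", "minor", "major", "failure"]

# T[i][j]: assumed probability that a component moves from state i to state j
# over one year when no maintenance is performed.
T = [
    [0.90, 0.08, 0.02, 0.00],
    [0.00, 0.85, 0.12, 0.03],
    [0.00, 0.00, 0.80, 0.20],
    [0.00, 0.00, 0.00, 1.00],
]

# O[j][k]: assumed probability that an inspection reports state k
# when the true state is j (an imperfect inspection).
O = [
    [0.85, 0.15, 0.00, 0.00],
    [0.10, 0.80, 0.10, 0.00],
    [0.00, 0.15, 0.80, 0.05],
    [0.00, 0.00, 0.00, 1.00],
]


def predict(belief):
    """Push the belief forward through one year of deterioration."""
    n = len(belief)
    return [sum(belief[i] * T[i][j] for i in range(n)) for j in range(n)]


def update(belief, observed):
    """Condition the belief on an inspection report using Bayes' rule."""
    weighted = [b * O[j][observed] for j, b in enumerate(belief)]
    total = sum(weighted)
    if total == 0:
        raise ValueError("observation has zero probability under this belief")
    return [w / total for w in weighted]


belief = [1.0, 0.0, 0.0, 0.0]        # component starts as new
belief = predict(belief)             # one year passes
belief = update(belief, observed=1)  # inspector reports "minor"

for name, p in zip(STATES, belief):
    print(f"{name:>10}: {p:.3f}")
```

With these assumed numbers, “no damage” remains the most likely state even after the inspector reports minor damage, because a false alarm on a healthy component is more probable than real damage after only one year. The decision-maker works with this probability distribution rather than a single known state.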

“Let’s say we’re managing an infrastructure system with 15 interdependent components, each having 10 possible states and 10 available actions,” Papakonstantinou said. “The system would have 10 to the power of 15 potential states and actions, which is more than the number of stars and planets in our Milky Way galaxy.”
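The arithmetic behind that example can be checked in a few lines. This sketch only counts combinations. The variable names are illustrative, and the star count used for comparison is a rough, commonly cited upper estimate rather than a figure from the researchers.

```python
# Counting joint states and actions for the example in the quote above.
components = 15
states_per_component = 10
actions_per_component = 10

# Each component's state (or action) can be chosen independently,
# so the counts multiply across components.
joint_states = states_per_component ** components
joint_actions = actions_per_component ** components

# Assumption: roughly 400 billion stars, a commonly cited upper estimate
# for the Milky Way, used here only for a sense of scale.
milky_way_stars_estimate = 4e11

print(f"joint states:  {joint_states:.1e}")
print(f"joint actions: {joint_actions:.1e}")
print(f"states per estimated star: {joint_states / milky_way_stars_estimate:,.0f}")
```

Adding a single component multiplies both counts by 10, which is why enumerating every combination quickly becomes impractical.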

The astronomical number of potential states and actions, called the “curse of dimensionality,” is a barrier for infrastructural decision-making, explained Charalampos Andriotis, a postdoctoral researcher in civil engineering at Penn State.

“If this immense number of states and actions underlies simple infrastructure settings, how can we tame the complexities of real-world networks?” Andriotis said.

According to Papakonstantinou and Andriotis, recent advances in computing brought forth a novel solution. Combining deep learning, a set of algorithms able to discover patterns in vast amounts of data, with existing reinforcement learning techniques produces a powerful framework for sequential decision-making problems. Deep reinforcement learning has even outperformed human experts in games that have been played for hundreds of years.

Inspired by these advances, the researchers developed their own deep reinforcement learning model, a deep centralized multi-agent actor-critic algorithm, capable of identifying optimal maintenance and inspection policies for large systems and overcoming the “curse of dimensionality.”
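The article does not describe the algorithm’s internals. One general way a multi-agent formulation can avoid an exponential action count, assumed here purely for illustration, is to have the policy produce a separate action distribution for each component instead of one distribution over every joint action. The sketch below compares the two output sizes for the 15-component example and samples a joint action from hypothetical per-component distributions. The uniform probabilities stand in for whatever a trained policy would output.

```python
import random

N_COMPONENTS = 15
N_ACTIONS = 10

# One probability per joint action: grows exponentially with component count.
joint_outputs = N_ACTIONS ** N_COMPONENTS

# One small distribution per component: grows linearly with component count.
factored_outputs = N_ACTIONS * N_COMPONENTS


def sample_joint_action(per_component_probs, rng):
    """Draw one action index per component from its own distribution."""
    return [
        rng.choices(range(len(probs)), weights=probs, k=1)[0]
        for probs in per_component_probs
    ]


rng = random.Random(0)
# Placeholder policy output (an assumption): uniform over actions.
uniform = [[1.0 / N_ACTIONS] * N_ACTIONS for _ in range(N_COMPONENTS)]
action = sample_joint_action(uniform, rng)

print(f"joint outputs:    {joint_outputs:.1e}")
print(f"factored outputs: {factored_outputs}")
print(f"sampled action per component: {action}")
```

Under this assumption, the policy needs 150 outputs rather than 10 to the power of 15, while a shared view of the whole system can still inform every component’s choice.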

“The more complex the problem, the more intelligent the solution needs to be,” Andriotis said. “Emerging challenges like climate change, population demands and resource limitations further epitomize such complexities for the resilience and sustainability of future communities.”

Papakonstantinou and Andriotis tested their multi-agent algorithm against a variety of baseline policies and deterioration factors, all representative of standard infrastructure management environments.

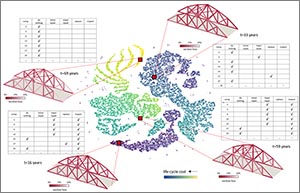

In one scenario, the algorithm was applied to a deteriorating, non-stationary network with 10 components and a 50-year lifespan. Each component could exhibit one of four possible damage levels, ranging from “no damage” to “failure,” and its deterioration rate was controlled by actions taken by the “agent.” After a few thousand training episodes, the “agent” had “learned” its optimal policy, outperforming all of the baselines by up to 50%.

“It performed better with partial state information than any traditional policy tested, even when these were optimized based on exact knowledge of the system, hence, the best information possible,” Papakonstantinou said. “We didn’t expect this outcome.”

In another scenario, the algorithm was applied to a truss bridge structural system with multiple components subject to corrosion and a 70-year lifespan. The system has 1.2 times 10 to the power of 81 possible states, and the “agent” needed 18,000 training episodes to surpass the best baseline. It eventually exceeded all other policies by up to 20%, a margin that looks more significant when set against the $3 billion spent in Pennsylvania on highway and bridge improvements last year.

According to the researchers, algorithm improvements continue. They are currently working on the ability to handle resource scarcity and other constraints necessary for practical applications while also studying the autonomous cooperation of multiple “agents” under these operating conditions.

“It’s a matter of computational power, but maybe in 10 years, entire infrastructure networks at the state and national levels could be managed with the aid of AI,” Papakonstantinou said.

This research was supported by a National Science Foundation Faculty Early Career Development (CAREER) Award and the U.S. Department of Transportation 2018 Region 3 Center for Integrated Asset Management for Multimodal Transportation Infrastructure Systems (CIAMTIS).